Category: AI and machine learning

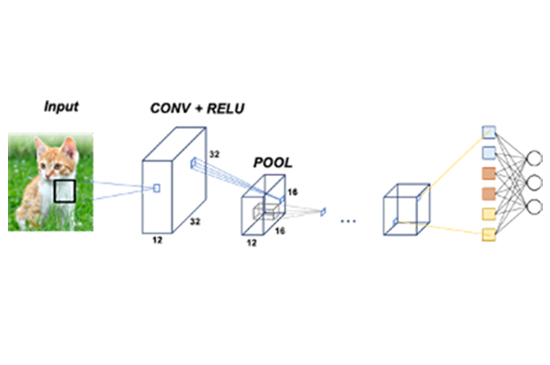

Deep neural architectures

Since AlexNet, the deep convolutional neural network that won the ImageNet challenge in 2012, deep learning…

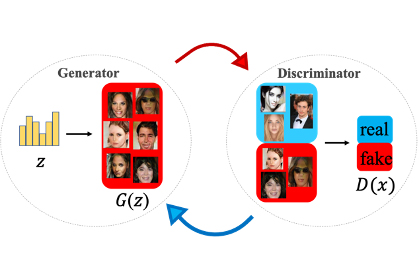

Generative adversarial networks

Generative Adversarial Networks (GANs) have been called the most interesting idea in the last 10…

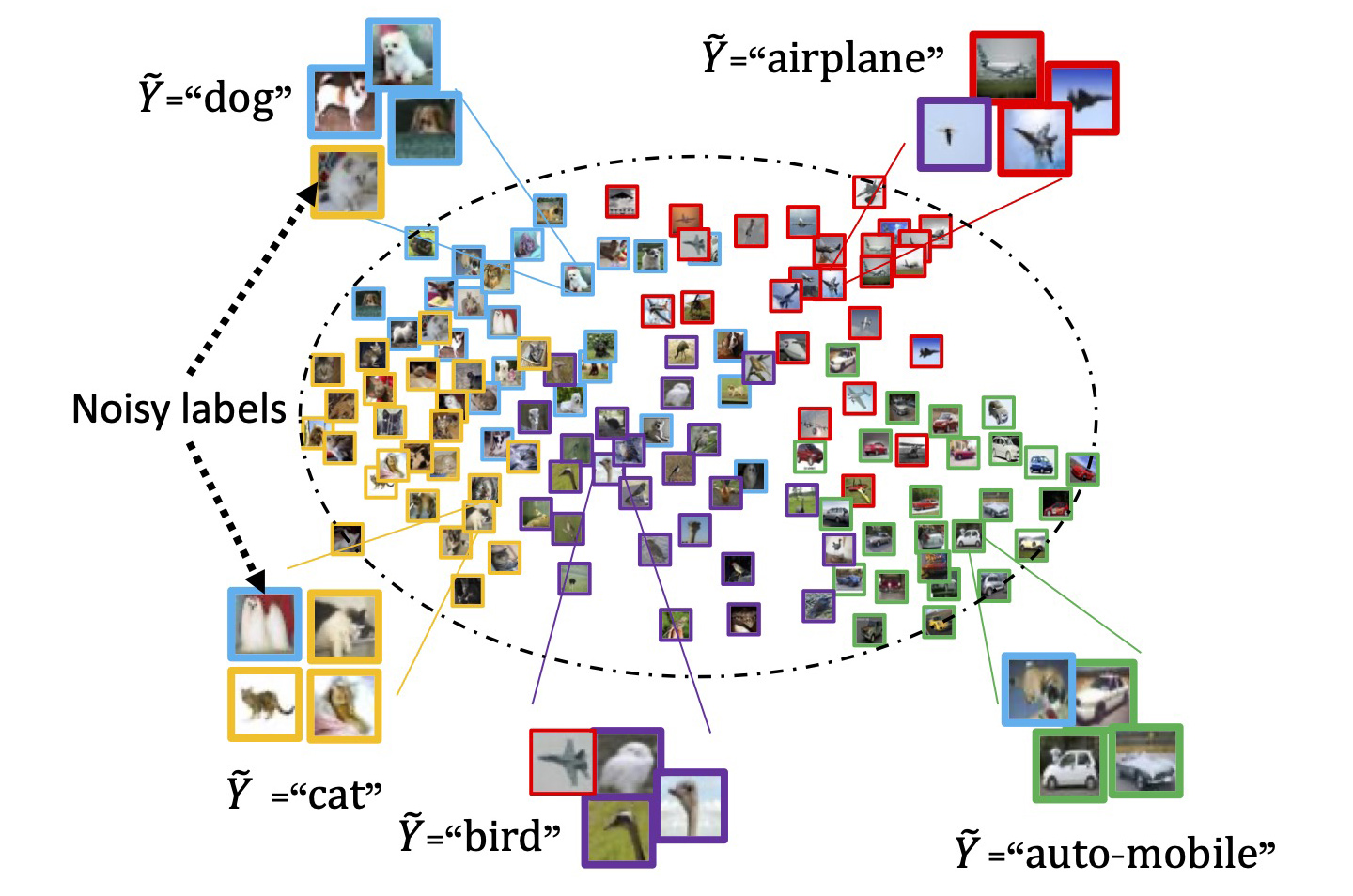

Label-noise learning

Learning with noisy labels has recently become an increasingly important topic. The reason is…

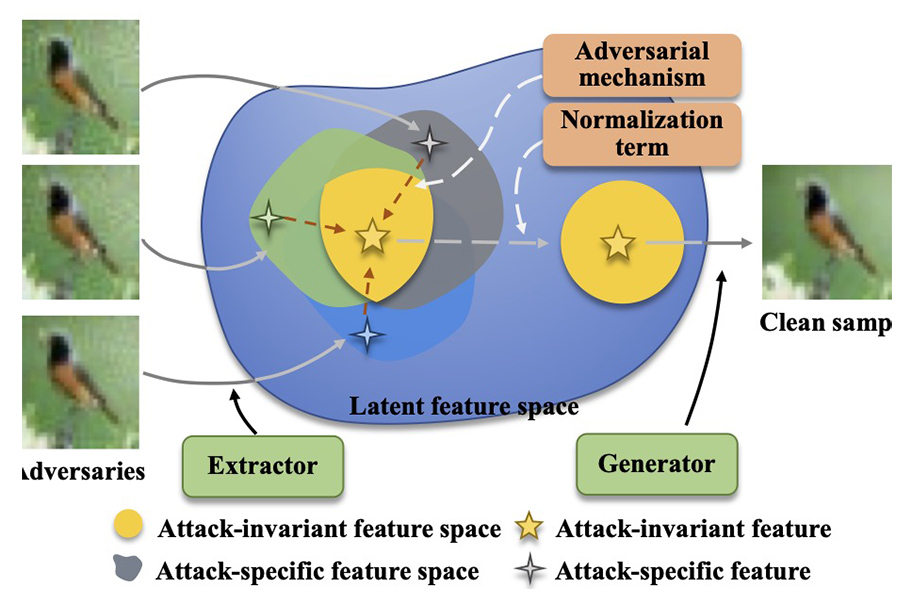

Robust/adversarial learning

We are also interested in how to reduce the side effects of noise on the…

Statistical (deep) learning theory

Deep learning algorithms have achieved impressive results, e.g., painting pictures, beating Go champions, and autonomously…

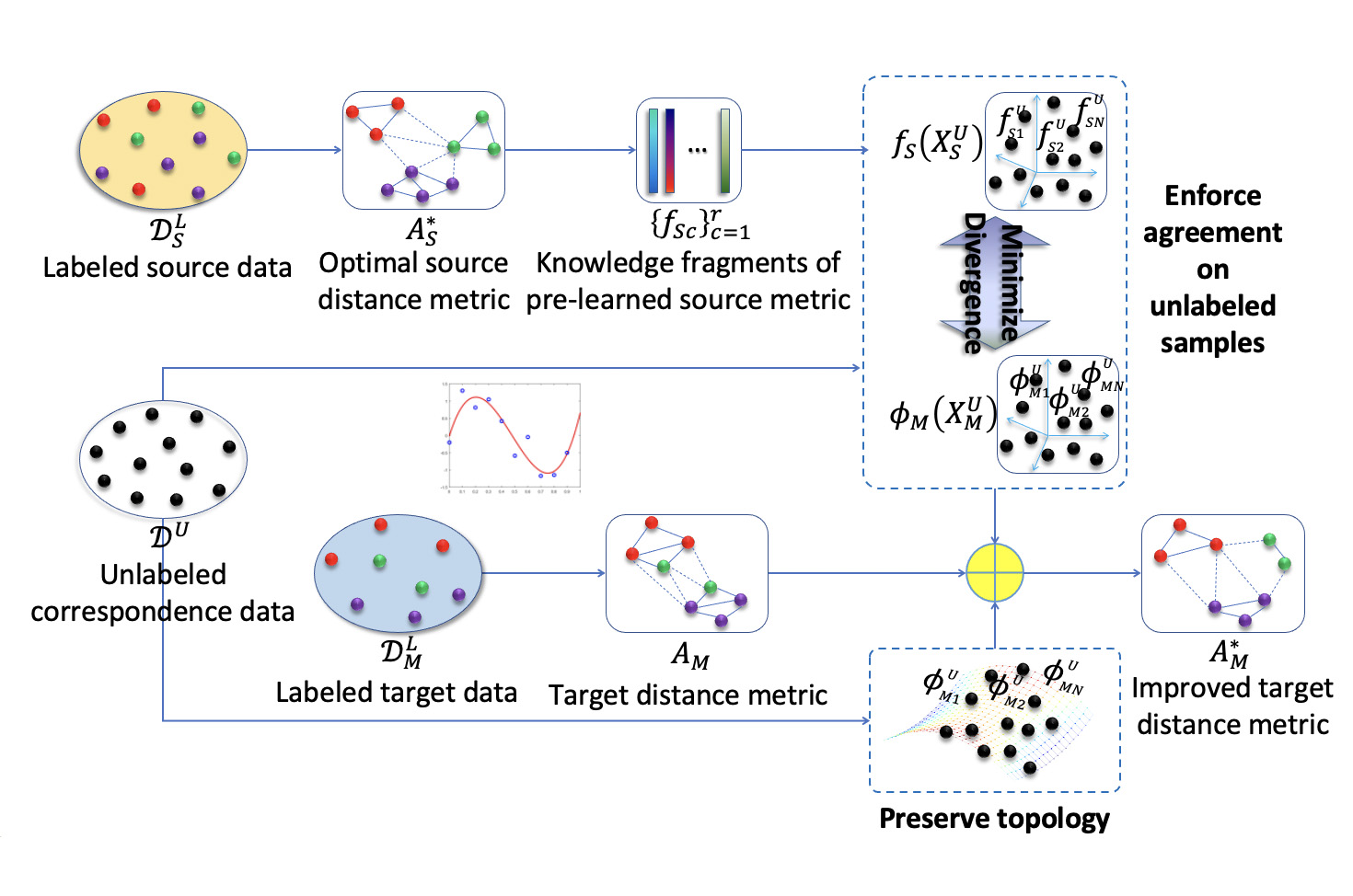

Transfer learning

Just like humans, machines can also find the common knowledge shared between tasks and transfer the…